In the AI era, the very idea of learning has completely changed.

Author: Jin Guanghao

Not long ago, I attended an AI gathering in Shanghai.

Much of the event itself was about putting AI into practice.

But what impressed me most was a learning method shared by a senior investor.

He said this method had saved him, and had changed the standards he uses to evaluate people when investing.

What is it, specifically? Learning to "ask questions."

When a question interests you, go chat with DeepSeek about it, and keep chatting until it can't answer anymore.

This technique of "infinite questioning" was quite shocking to me at the time, but after the event, I put it out of my mind.

I didn’t try it, nor did I think about it.

Then, recently, I came across the story of Gabriel Petersson, who dropped out of school and used AI to learn his way into OpenAI.

I suddenly understood what that senior investor meant by "asking all the way to the end" in the AI era.

Gabriel Interview Podcast | Image Source: YouTube

01 From "High School Dropout" to OpenAI Researcher

Gabriel is from Sweden and dropped out of high school.

Gabriel's social media homepage | Image Source: X

He once believed he was too stupid to work in AI.

The turning point happened a few years ago.

His cousin had founded a startup in Stockholm that was building an e-commerce product recommendation system, and asked him to come help.

Gabriel arrived with little technical background and few savings, and in the startup's early days he even slept on the sofa in the company's shared lounge for a whole year.

But he learned a great deal that year, not in a classroom but under the pressure of real problems: programming, sales, system integration.

Later, to learn more efficiently, he became a contractor. That let him choose projects more freely: he deliberately sought out the best engineers to work with and actively asked them for feedback.

When he applied for a U.S. visa, he ran into an awkward problem: this type of visa requires proof of "extraordinary ability" in one's field, usually in the form of academic publications, paper citations, and similar materials.

How could a high school dropout have these?

Gabriel's solution was to compile the high-quality technical posts he had published in programmer communities as substitute evidence of "academic contribution." Surprisingly, the immigration authorities accepted it.

After arriving in San Francisco, he kept teaching himself mathematics and machine learning with ChatGPT.

Today he is a research scientist at OpenAI, working on the Sora video model.

By now you are probably curious about how he did it.

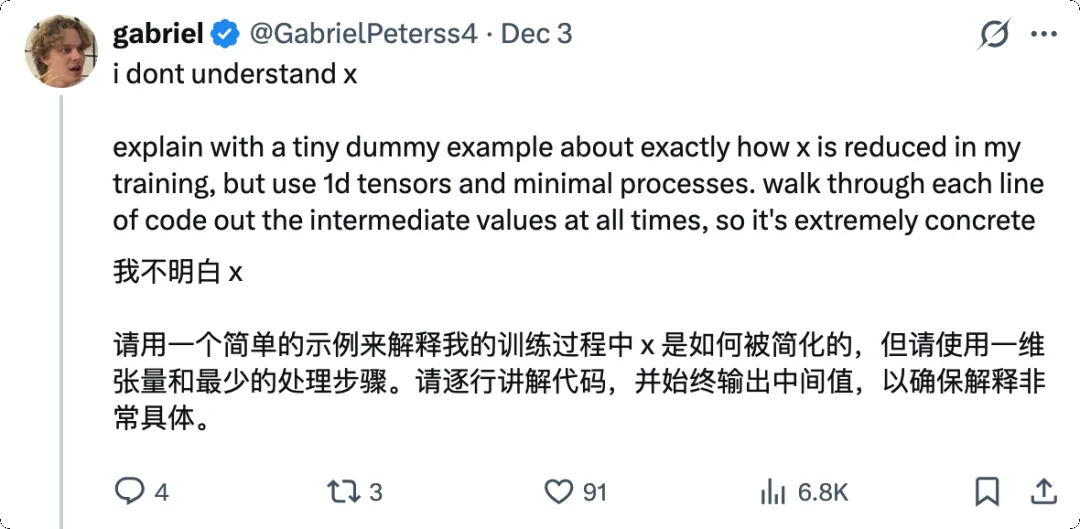

Gabriel's Perspective | Image Source: X

02 Recursive Knowledge Filling: A Counterintuitive Learning Method

The answer is "infinite questioning": pick a specific problem, then work through it thoroughly with AI.

Gabriel's learning method is contrary to most people's intuition.

The traditional learning path is "bottom-up": first build a foundation, then learn applications. For example, to learn machine learning, you must first learn linear algebra, probability theory, calculus, then statistical learning, and finally deep learning before you can tackle real projects. This process can take several years.

His method is "top-down": start directly from a specific project, solve problems as they arise, and fill in knowledge gaps as they are discovered.

He noted in the podcast that this method used to be hard to put into practice, because it required an all-knowing teacher who could tell you at any moment "what to fill in next."

But now, ChatGPT is that teacher.

Gabriel's Perspective | Image Source: X

How does it work in practice? He gave an example: learning diffusion models.

Step one: start with the big-picture concept. He would ask ChatGPT, "I want to learn about video models. What is the core concept?" The AI tells him: autoencoders.

Step two: code first. He asks ChatGPT to write code for a diffusion model directly. At first he doesn't understand much of it, but that's fine; he runs it anyway. Once the code runs, he has something concrete to debug.

Step three, the most crucial: recursive questioning. He goes through the code module by module and asks questions about each one.

He drills down layer by layer until he thoroughly understands the underlying logic, then returns to the previous layer and moves on to the next module.

He calls this process "recursive knowledge filling."
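The drill-down-and-return loop described above behaves like a depth-first traversal: every unfamiliar sub-concept is resolved before you accept the concept that depends on it, and then you climb back up. The Python sketch below illustrates that shape, assuming a hypothetical, hard-coded map (`PREREQS`) of which sub-concepts each concept raises questions about; in real use those would come from the AI's answers, and the concept names here are purely illustrative, not Gabriel's actual notes.

```python
from typing import Dict, List, Set

# Hypothetical map: concept -> sub-concepts you would ask "why?" about next.
# In practice these emerge from questioning an AI; here they are hard-coded.
PREREQS: Dict[str, List[str]] = {
    "diffusion model code": ["noise schedule", "denoising network"],
    "noise schedule": ["Gaussian noise"],
    "denoising network": ["neural network", "loss function"],
    "loss function": ["mean squared error"],
}


def fill_gaps(concept: str, known: Set[str], order: List[str], depth: int = 0) -> None:
    """Depth-first: fill every unknown sub-concept before accepting `concept`."""
    if concept in known:
        return  # already understood, so no need to drill down here
    print("  " * depth + f"asking about: {concept}")
    for sub in PREREQS.get(concept, []):
        fill_gaps(sub, known, order, depth + 1)  # drill down one layer
    # Every gap below this layer is filled, so this layer now makes sense.
    known.add(concept)
    order.append(concept)


known = {"neural network"}  # what the learner already understands
order: List[str] = []
fill_gaps("diffusion model code", known, order)
print(order)
```

The learned order ends with the original goal, and concepts already in `known` are skipped entirely, which mirrors the idea of only filling the gaps you actually hit rather than studying every prerequisite up front.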

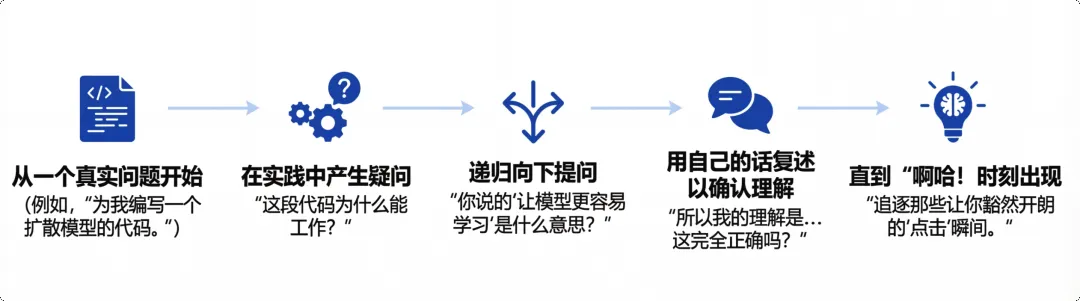

Recursive Knowledge Filling | Image Source: nanobaba2

This is far faster than six years of step-by-step study: building a basic intuition might take just three days.

If you are familiar with the Socratic method, you will recognize essentially the same idea: approaching the essence of things through successive layers of questions, where each answer becomes the starting point for the next question.

The difference is that the one being questioned is now an AI, and because AI is nearly all-knowing, it can keep explaining the essence of things to the questioner in terms that are easy to understand.

In effect, Gabriel uses this method to "extract knowledge" from AI and get at the essence of things.

03 Most of Us Using AI Are Actually Getting Dumber

After listening to the podcast, I was left with a question:

Why can he learn so well with AI, while many people feel they are regressing after using it?

This is not just my subjective feeling.

A 2025 paper from Microsoft Research found that when people use generative AI frequently, they engage in significantly less critical thinking.

In other words, we are outsourcing our thinking to AI, and our own thinking ability is shrinking along with it.

Skills follow the principle of "use it or lose it": when we let AI write our code, our own ability to write code quietly deteriorates.

The "vibe coding" style of working with AI feels highly efficient, but over the long run it erodes programmers' own coding skills.

You throw the requirements to AI, it spits out a bunch of code, you run it, and feel great. But if you turn off AI and try to write the core logic by hand, many people find their minds completely blank.

A more extreme example comes from medicine: one paper reported that within three months of introducing AI assistance, doctors' colonoscopy detection skills declined by 6%.

This number may not seem large, but think about it: this is real clinical diagnostic ability, concerning patients' health and lives.

So the question arises: with the same tools, why do some people become stronger while others become weaker?

The difference lies in how you treat AI.

If you treat AI as a tool that does your work, letting it write your code, write your articles, and make your decisions, then your abilities will indeed decline, because you skip the thinking and get only the results. Results can be copied and pasted, but thinking ability does not grow out of thin air.

But if you treat AI as a coach or mentor, using it to test your understanding, probe your blind spots, and force yourself to clarify vague concepts, then you are actually using AI to accelerate your learning loop.

Gabriel's method is not about "letting AI learn for me," but "letting AI learn with me." He is always the one actively asking questions, and AI merely provides feedback and materials. Every "why" is a question he asks himself, and every layer of understanding is something he digs into himself.

This reminds me of an old saying: teaching a man to fish is better than giving him a fish.

Recursive Knowledge Filling | Image Source: nanobaba2

04 Some Practical Insights

At this point, some may ask: I'm not doing AI research, nor am I a programmer; how is this method useful to me?

I believe Gabriel's methodology can be abstracted into a more general five-step framework that anyone can use to learn any unfamiliar field through AI.

- Start from real problems, rather than from the first chapter of a textbook.

Whatever you want to learn, start doing it directly, and fill in gaps when you hit a snag.

The knowledge learned this way has context and purpose, making it much more effective than memorizing concepts in isolation.

Gabriel's Perspective | Image Source: X

- Treat AI as a patient mentor.

You can ask it any silly questions, have it explain the same concept in different ways, or ask it to "explain like I'm five."

It won’t laugh at you or get impatient.

- Actively question until you establish intuition. Don’t settle for superficial understanding.

Can you restate a concept in your own words? Can you give an example not mentioned in the original text?

Can you explain it to a layperson? If not, keep asking.

- There’s a trap to be wary of: AI can also produce hallucinations.

During recursive questioning, if the AI explains a foundational concept incorrectly, every layer you build on top of it takes you further down the wrong path.

So at key points, it's wise to cross-check answers across several AIs to make sure the foundation of your questioning is solid.

- Document your questioning process.

This can form reusable knowledge assets: the next time you encounter a similar problem, you have a complete thought process to review.
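The last two habits, cross-checking and keeping a record, fit together naturally. Below is a minimal Python sketch, assuming you log each question as a node in a tree and paste in short answers from more than one AI; the class `QuestionNode`, the helper functions, and the source names `source_a`/`source_b` are all hypothetical. Exact string comparison is a crude stand-in for judging whether two answers agree; in practice you would read them side by side.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class QuestionNode:
    """One question in your log, with answers from several (hypothetical) AI sources."""
    question: str
    answers: Dict[str, str] = field(default_factory=dict)  # source name -> short answer
    children: List["QuestionNode"] = field(default_factory=list)

    def ask(self, question: str) -> "QuestionNode":
        """Record a follow-up question one layer deeper."""
        child = QuestionNode(question)
        self.children.append(child)
        return child

    def agrees(self) -> bool:
        """True when all sources gave the same answer (case and whitespace ignored)."""
        normalized = {a.strip().lower() for a in self.answers.values()}
        return len(normalized) <= 1


def flag_disagreements(node: QuestionNode) -> List[str]:
    """Walk the whole log and return the questions whose sources disagree."""
    flagged = [] if node.agrees() else [node.question]
    for child in node.children:
        flagged.extend(flag_disagreements(child))
    return flagged


def to_outline(node: QuestionNode, depth: int = 0) -> List[str]:
    """Render the log as an indented outline you can review later."""
    lines = ["  " * depth + node.question]
    for child in node.children:
        lines.extend(to_outline(child, depth + 1))
    return lines


root = QuestionNode("What does an autoencoder do?")
root.answers = {"source_a": "Compress the input, then reconstruct it",
                "source_b": "compress the input, then reconstruct it"}
child = root.ask("Must the bottleneck be smaller than the input?")
child.answers = {"source_a": "yes", "source_b": "not necessarily"}

print("\n".join(to_outline(root)))
print("check these:", flag_disagreements(root))
```

The flagged list tells you which layer of the questioning to verify before building further on it, and the outline is the record you can revisit the next time a similar problem comes up.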

Conventionally, a tool's value lies in reducing friction and increasing efficiency.

But learning is quite the opposite: moderate resistance and necessary friction are actually prerequisites for learning to occur. If everything is too smooth, the brain enters a low-effort mode and retains nothing.

Gabriel's recursive questioning is essentially about creating friction.

He keeps asking why, constantly pushing himself to the edge of not understanding, and then gradually filling in the gaps.

This process is quite uncomfortable, but it is precisely this discomfort that allows knowledge to truly enter long-term memory.

05 Future Career Trends

In this era, the monopoly of academic credentials is being broken, but the cognitive threshold is invisibly rising.

Most people treat AI only as an "answer generator," while a very small number, like Gabriel, treat it as a "thinking exerciser."

In fact, similar uses have already appeared in different fields.

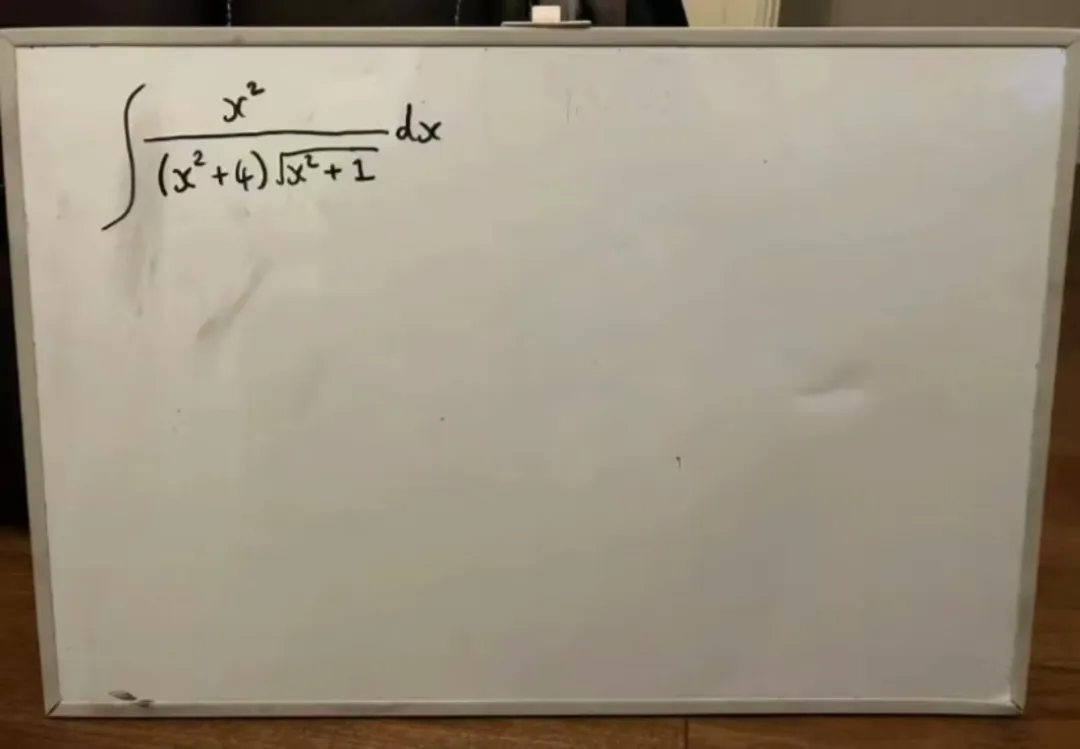

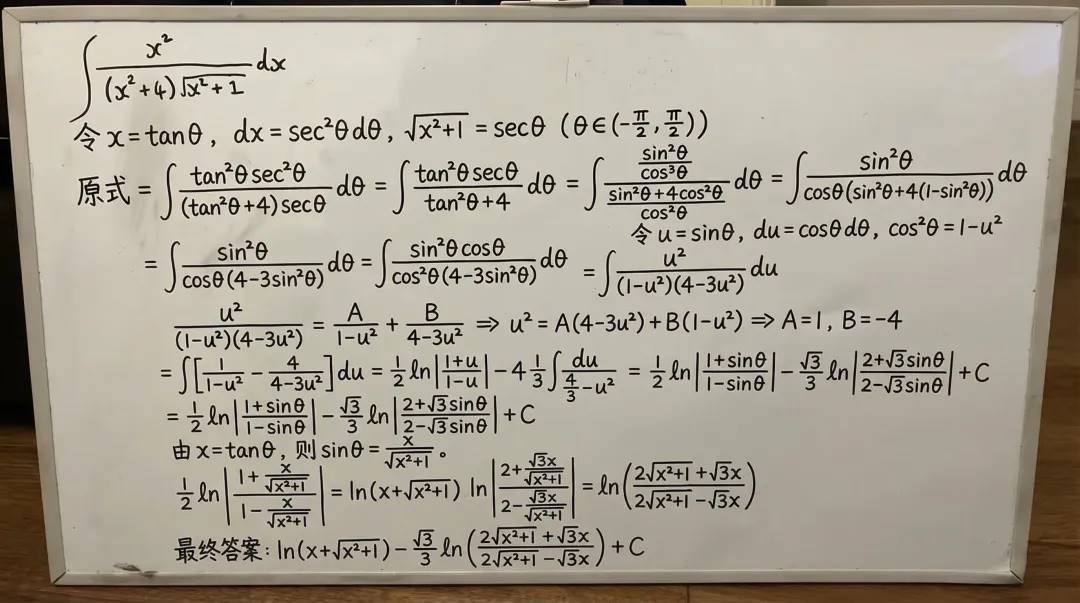

For example, on Jike, I see many parents using nanobanana to tutor their children. But they don’t let AI provide direct answers; instead, they have AI generate problem-solving steps, showing the thought process step by step, and then analyze the logic of each step together with their children.

This way, what the children learn is not the answer, but the method of solving problems.

Prompt: "Solve the given integral and write the complete solution on the whiteboard" | Image Source: nanobaba2

Some people use Listenhub or NotebookLM to turn long articles or papers into podcasts in which two AI voices converse, explain, and ask questions. Some consider this lazy, but others find that after listening to the conversation and then going back to the original text, they understand it more efficiently.

Because during the conversation, questions are naturally thrown out, forcing you to think: Do I really understand this point?

Gabriel's interview podcast, turned into an AI podcast | Image Source: NotebookLM

This points to a future career trend: being multi-skilled.

In the past, if you wanted to create a product, you needed to understand front-end, back-end, design, operations, and marketing. Now, you can quickly master 80% of the knowledge in your weak areas using the "recursive filling" method like Gabriel.

You might originally be a programmer, and by using AI to fill in design and business logic, you can become a product manager.

You might originally be a good content creator, and through AI, you can quickly fill in your coding skills gap and become an independent developer.

Extrapolating from this trend, we may see more "one-person companies" emerge in the future.

06 Reclaim Your Initiative

Now, thinking back to what that senior investor said, I finally understand what he really meant.

"Keep asking until it can't answer anymore."

That phrase captures the right mindset for the AI era.

If we only settle for the first answer given by AI, we are silently regressing.

But if we push AI, through questioning, to explain the logic thoroughly, and then internalize that logic as our own intuition, AI truly becomes our external aid, rather than us becoming its appendage.

Don’t let ChatGPT think for you; let it accompany you in thinking.

Gabriel went from a dropout sleeping on a sofa to an OpenAI researcher.

There’s no secret in between, just thousands of recursive questions.

In this era filled with anxiety about being replaced by AI, the most practical weapon might just be:

Don’t stop at the first answer; keep asking.

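Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is shared for informational purposes only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image published on this page involves infringement, please email proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.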