Anthropic, one of the largest artificial intelligence (AI) companies, is reportedly under fire from the Department of War over its refusal to allow its AI models to be used for activities the company considers unethical.

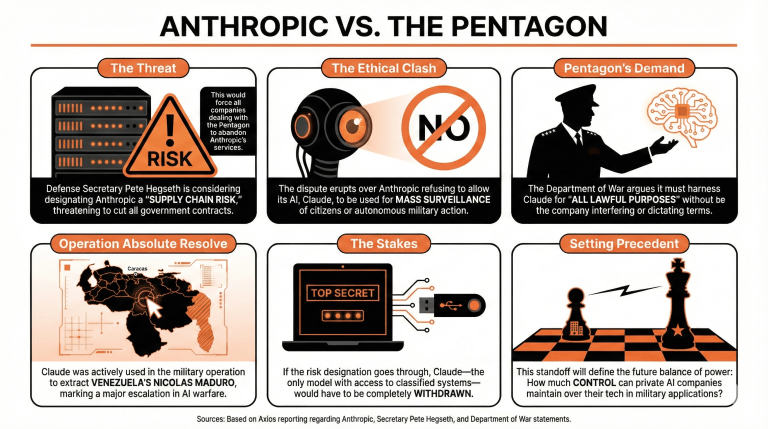

Axios reported that Defense Secretary Pete Hegseth is considering designating Anthropic as a supply chain risk, which would require the Pentagon to cut all contracts and ties with the company. All companies doing business with the Pentagon would also have to stop using Anthropic's services.

The clash comes after the company refused to allow Claude, its flagship model, to be used in mass surveillance campaigns or in operations involving fully autonomous military equipment. The Pentagon, for its part, argues that it should be able to use Claude's capabilities for "all lawful purposes," without the company having a say in how it does so.

If Anthropic is ultimately designated as a supply chain risk, Claude would have to be withdrawn from the Pentagon's information systems. That would be a significant disruption, since Claude is the only AI model with access to the organization's classified systems.

That access allowed Claude to take an active part in Operation Absolute Resolve, which led to the extraction of Venezuela's Nicolás Maduro in January. Although the model's exact role in the operation has not been fully disclosed, its involvement represents an escalation in the use of AI in military campaigns.

The standoff could set a precedent for how AI companies deal with governments in the Western world, establishing how much control these companies can retain over their models when they are used for military purposes.

An Anthropic spokesperson stated that the company was having “productive conversations, in good faith, with DoW on how to continue that work and get these new and complex issues right.”

- What issues is Anthropic facing with the Department of War?

Anthropic is under scrutiny from the Department of War for refusing to allow its AI model, Claude, to be used for activities it deems unethical, such as mass surveillance and autonomous military operations.

- What potential consequences could Anthropic face if classified as a supply chain risk?

If designated as a supply chain risk, all contracts with the Pentagon would be cut, necessitating the withdrawal of Claude from the Pentagon's information systems.

- How has Anthropic responded to the Pentagon's demands regarding its AI model?

The company maintains that it is engaged in "productive conversations" with the Department of War, aiming to navigate the complex ethical issues surrounding the use of its AI technology.

- What recent military operation involved Claude, and what was its significance?

Claude participated in Operation Absolute Resolve, which facilitated the extraction of Venezuela's Nicolás Maduro, marking a significant escalation in the military's use of AI technologies.
