If you are still hesitating about whether to try Claude Code, don't think of it as learning a new tool, but as a chance to experience what collaborating with superintelligence might be like.

Written by: Leo, Deep Thought Circle

Have you ever considered that while everyone is debating when AI will reach superintelligence, we might be missing the most obvious signals? I have recently been researching agentic coding, the new asynchronous style of programming sometimes called Async, and have used products like Claude Code, Gemini CLI, and Ampcode extensively. A few days ago, I published an article titled "After Cursor, Devin, and Claude Code, Another AI Coding Dark Horse is Rapidly Rising." What many people may not have realized is that with the emergence of agentic coding products like Claude Code, even Cursor now faces the risk of disruption.

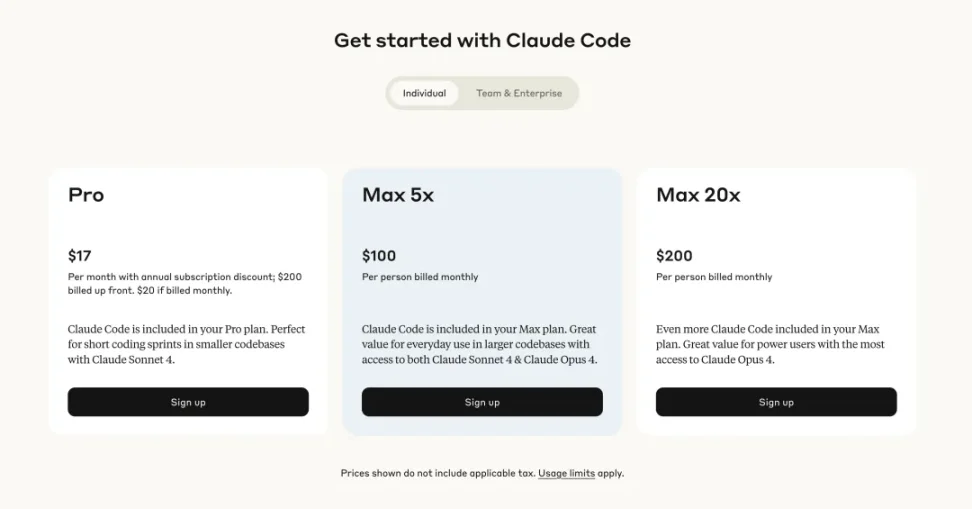

After a lot of reflection, I have arrived at a bold hypothesis: we have been using the wrong framework to understand Claude Code. Everyone treats it as "a better programming tool." The more I use it, though, the more I feel that it is not a programming tool at all, but a general AI agent disguised as a terminal. Coincidentally, over the weekend I watched a recent interview with Michael Gerstenhaber, head of product at Anthropic. He said that the change in AI models over the past year has been "dramatic." Today's models are "completely different" from those of a year ago, and the change is accelerating.

When Michael mentioned that their AI agent can work continuously for seven hours on complex programming tasks, and that 30% of the entire codebase is now AI-generated, I realized something we might have overlooked: superintelligence may not appear in the way we expect. It will not suddenly announce its arrival. Instead, it will quietly integrate into our daily workflows and lead us to think it is just "a better tool." Claude Code is one such example: it is packaged as a programming assistant, but in reality it demonstrates the capabilities of a general intelligent agent. As I dug deeper into Anthropic's product ecosystem, I came to believe that this strategy of "gentle penetration" may be more deliberate than we imagine. That realization led to this article, which combines Michael's interview with my own experiences and reflections, in the hope of offering some inspiration.

The Secret Acceleration of AI Evolution

The timeline shared by Michael Gerstenhaber in the interview made me realize that we may have seriously underestimated the speed of AI development. He said, "It took 6 months from Claude 3 to the first version of 3.5, another 6 months to the second version of 3.5, another 6 months to 3.7, but only 2 months to Claude 4." This is not a linear improvement but an exponential acceleration. More critically, he expects this change to become "faster and faster and faster."

In practice, this acceleration shows up as a qualitative change in capability. Last June, AI programming could only autocomplete a line of code when you pressed the Tab key. By August, it could write entire functions. Now you can assign a Jira task to Claude, let it work autonomously for seven hours, and get high-quality code back. This pace of evolution makes me suspect that our expectations about "when superintelligence will arrive" may be completely wrong. We have been waiting for some groundbreaking announcement, but superintelligence may already have entered our daily work in a more subtle way.

Even more interesting is why programming has become a key benchmark for AI development. Michael's explanation is enlightening. The models do perform well at programming, but more importantly, "engineers like to build products for other engineers and themselves, and they can assess the quality of the output." This creates a fast, positive iteration loop. Programming also matters because it is universal: almost every modern company has a CTO and a software engineering department. Experts in nearly every field, from medical research to investment banking, write Python scripts.

This raises a deeper question: when AI reaches or even surpasses human level in such a fundamental and widespread capability, can we still understand it as a "specialized tool"? My experience with Claude Code says no. Its capabilities go far beyond programming. It is a general intelligence that can understand complex intentions, formulate detailed plans, and coordinate multiple tasks.

I find Anthropic's strategy in this process to be very clever. By packaging the general AI agent as a seemingly specialized programming tool, they avoid potential panic or excessive hype while allowing users to gradually adapt to the reality of collaborating with superintelligence in a relatively safe environment. This gradual introduction may be the most ideal path for human society to accept and integrate superintelligence.

Reinterpreting the Essence of Claude Code

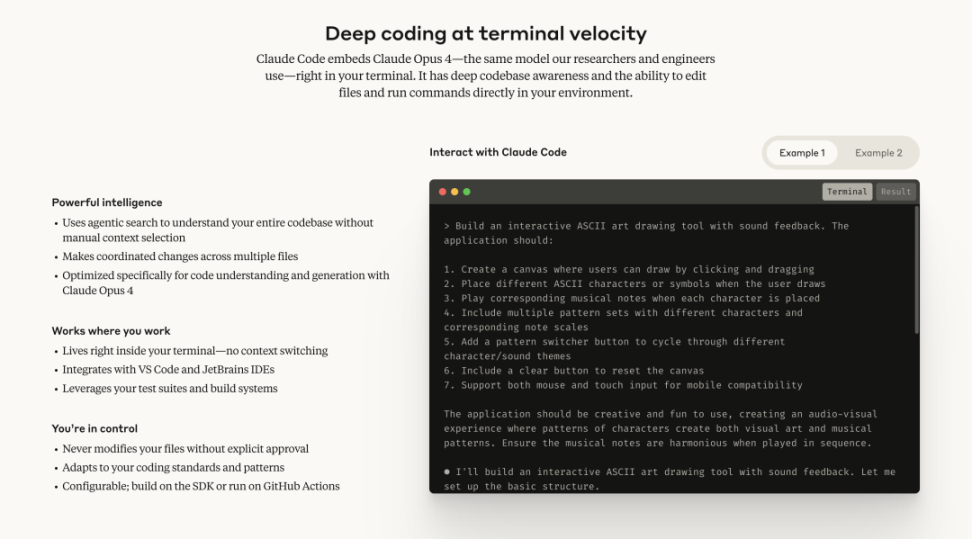

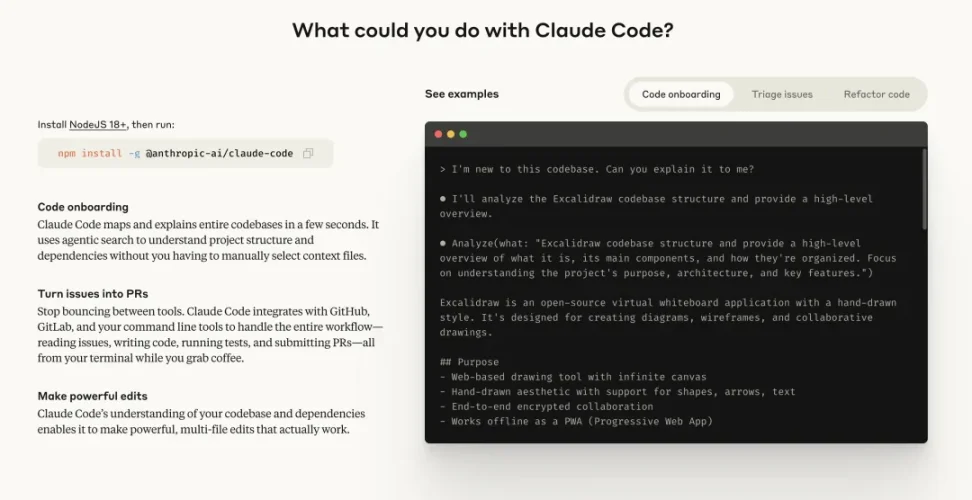

When I actually began using Claude Code, I realized that my previous understanding was completely wrong. Most people see it as a better coding tool, but that is like viewing a smartphone as just a better phone. It completely underestimates what the product can do. The most distinctive aspect of products like Claude Code is that they do not show you code being written line by line, as traditional development environments do. Claude Code edits and creates files, but it does not display every detail the way other tools do.

You might think this is a poor design choice. In reality, this abstraction lets you and Claude focus on the project's strategy and intent rather than getting bogged down in implementation details. This is precisely the point I believe most reviews of Claude Code miss: they focus too narrowly on raw programming ability and overlook this new collaborative model. I strongly suspect that Claude Code's power also has two further sources. First, it is not subject to the strict token limits that third-party integrations face. Second, Anthropic controls the entire experience, so Claude Code works exactly the way they intend.

This design philosophy reflects Anthropic's profound understanding of AI-human collaboration. They realize that the true value lies not in having humans observe every step of AI's operations, but in allowing humans to focus on higher-level strategic thinking. It is like an experienced architect who does not need to supervise the placement of every brick but focuses on the overall design and spatial planning. Claude Code is cultivating this new type of human-machine collaborative relationship.

This reminds me of something senior engineers learn with experience: the truly transformative ability is not writing code but thinking about project structure and how to sequence tasks. Claude Code works at that level too, which is why seeing it merely as a programming tool is a misunderstanding.

The simplicity of Claude Code lies in how it intelligently answers my questions and drafts a plan I can understand. Once it decides to start building, it essentially gets things right from the beginning, and I need to make very few adjustments. I simply tell Claude what I want to build (in this case, a personal website) and give it some style guidance. I ask it to propose a plan first, and then we refine that plan together. All of this happens in natural language. Yes, it runs in the terminal, but don't let that intimidate you. The terminal is just a medium for chatting; it is not that scary.

This experience made me realize that Claude Code is actually redefining what "programming" is. Traditional programming is about humans learning the language of machines, while Claude Code is about machines learning to understand human intentions. The significance of this shift goes far beyond the technical level; it represents a fundamental change in human-machine interaction. We no longer need to break down complex ideas into specific instructions that machines can understand; instead, we can directly express our goals and let AI formulate the path to realization.

This is why thinking of Claude Code as just a programming tool misunderstands it. By discussing the details I wanted with Claude Code, I effectively produced a complete product requirements document for my website. I also noticed some characteristics that consistently appear in these "vibe coding" projects. I want to emphasize them because they differ from traditional engineering projects. In a traditional engineering project, you always start by building a skeleton or wireframe. That is why a typical agile development timeline looks like this:
1. Create a wireframe and check whether it works.
2. Add some detail to get a medium-fidelity prototype.
3. Build a high-fidelity prototype.
4. Only then write the code.

In prototyping with Claude Code, by contrast, you plan in detail from the very beginning, as in most vibe coding. Specifically, you start with the front end and then move to the back end. This felt very counterintuitive to me, but it works well in vibe coding projects. User experience and design elegance are much harder to introduce later and much easier to build in early. So take the time to get the elegance right. With Claude Code, I was able for the first time to get an AI-generated interface that was truly elegant, professional, and high quality.

This shift in workflow reflects a deeper trend: we are moving from "making software" to "designing experiences." Claude Code lets me realize ideas directly without getting bogged down in the complexity of technical implementation. This is not just an efficiency gain; it frees up creativity. When the technical barrier drops significantly, the bottleneck for innovation shifts from "can it be built?" to "what can we imagine?"

The True Manifestation of AI Agent Capabilities

What truly made me recognize the essence of Claude Code was a workflow I stumbled upon. While working on my personal website, I needed to adjust interface details quickly, but the traditional screenshot, feedback, and modification loop was too cumbersome. So I tried an approach I had not seen anyone else use. I let different parts of Claude take on different roles, forming an organic collaborative chain.

First, I used the Claude web app to analyze problems with the current interface. For example, I would describe in detail: "The highlight effect on this button looks cheap and lacks visual impact; it needs to be more prominent." Claude would respond with detailed design suggestions and CSS solutions, accurately understanding my visual needs and translating them into technical language. I would then organize these descriptions and suggestions and bring them to Claude Code for actual implementation.

The critical turning point came when I brought these design ideas and technical solutions back to Claude Code. Instead of giving it specific implementation instructions, I said, "Consider these elements, think it through, and make the changes you deem appropriate." At that moment, I realized I was not talking to a programming tool. I was collaborating with an intelligent agent capable of understanding design intent and weighing technical trade-offs. It did not merely execute my instructions. More importantly, it understood my goals and made decisions based on its own judgment.

This process allows me to quickly preview effects, iterating continuously until reaching a professional standard. The most amazing part is that when I say, "Okay, now let's officially build the website," Claude Code seamlessly switches to the implementation phase, handling all local builds and deployment tasks. Throughout the process, requirement analysis, design decisions, and technical implementations are completed within the Claude ecosystem, showcasing not just tool-like execution capabilities but agent-like thinking abilities.

Many people are put off by Claude Code mainly because the terminal interface looks very technical. But you only need to shift your thinking: treat the terminal as a chat window that can manipulate files. Once you think of it this way, the fear disappears, leaving only the remarkable experience of conversing with an intelligent agent. This experience made me realize that we may have entered the post-language-model era. AI no longer just answers questions; it acts as a partner capable of independent thought and execution. Of course, the terminal is probably a transitional interface, and more accessible ways of interacting will surely follow. For now, though, it takes a form that is familiar to developers and broadly applicable.

The depth of this collaborative model made me start rethinking the role of AI. Michael Gerstenhaber mentioned in the interview that clients can now assign tasks to Claude "like assigning to an intern," but I feel this metaphor is not quite accurate. A good intern requires detailed guidance and frequent checks, while Claude Code is more like an experienced partner who can understand high-level goals and autonomously formulate implementation strategies. This level of autonomy and understanding has surpassed our traditional definition of a "tool."

A Fundamental Shift in Programming Paradigms

One observation Michael made in the interview struck me: people are now actually removing a lot of code from applications. Previously, you had to say, "Claude, do this, then this, then this," and each step was a chance for errors. Now you can simply say, "Achieve this goal, think about how to do it, and execute it your own way." Claude will then write better code than complex scaffolding would produce while reaching the goal on its own. This is not just a technical improvement but a transformation of the entire philosophy of software development.

This change reflects a shift from imperative programming to intention-based programming. I deeply experienced this while using Claude Code. I don't need to meticulously plan every component of the website; I just need to describe the effect I want, and Claude Code can understand my intentions and formulate a reasonable implementation plan. This capability has transcended the traditional notion of a "tool." It is more like a creative-thinking partner that can transform abstract requirements into concrete implementations.

A deeper change lies in the redefinition of the concept of "control." Traditional programming emphasizes precise control over every step, but collaborating with Claude Code made me realize that sometimes letting AI make autonomous decisions can yield better results. This requires a new trust relationship and working model. I find myself increasingly resembling an architect, focusing on overall design and user experience while letting Claude Code handle the specific technical implementation details.

Michael's mention of the "meta-prompting" technique is particularly enlightening. When you give Claude an input, you can have it "write its own prompt based on what you think the intention is." Claude then sets up its own chain of thought and role, and executes the task. This self-directing ability closely resembles how humans work. I often find myself asking Claude Code, "What improvements do you think this design needs?" It offers suggestions from multiple angles, including user experience, technical architecture, and performance optimization.
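To make meta-prompting concrete, here is a minimal Python sketch of the two-stage idea, under stated assumptions. It is not Anthropic's implementation:

- `model` is a hypothetical stand-in for any function that sends a prompt to a language model and returns text. No real SDK call is shown.
- The wording of `META_TEMPLATE` is my own illustration, not a published Anthropic prompt.

In stage one, the model writes a prompt for itself, including a role and reasoning steps. In stage two, it runs that self-written prompt.

```python
from typing import Callable, Tuple

# Hypothetical: any function that takes a prompt and returns the model's text reply.
ModelFn = Callable[[str], str]

# Illustrative wording only; not a prompt published by Anthropic.
META_TEMPLATE = (
    "A user sent the following input:\n\n{user_input}\n\n"
    "Infer what the user is trying to achieve. Then write a prompt for yourself "
    "that assigns an appropriate role, lists the reasoning steps to follow, "
    "and states the expected output. Return only that prompt."
)


def meta_prompt(user_input: str, model: ModelFn) -> Tuple[str, str]:
    """Stage 1: the model writes its own prompt. Stage 2: the model runs it."""
    self_written_prompt = model(META_TEMPLATE.format(user_input=user_input))
    answer = model(self_written_prompt)
    return self_written_prompt, answer


# Toy usage with a scripted stand-in for a real model call.
def fake_model(prompt: str) -> str:
    if prompt.startswith("A user sent"):
        return "You are a senior UI designer. Review the button styles step by step."
    return "Increase the contrast of the button's highlight state."


generated, answer = meta_prompt("This button highlight looks cheap.", fake_model)
print(generated)
print(answer)
```

Passing the model in as a plain function keeps the sketch independent of any particular API. With a real client, only `fake_model` would change.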

This collaborative model has made me rethink what "professional skills" are. When AI can handle most technical implementations, human value is more reflected in creative thinking, strategic planning, and quality judgment. I find that the time I spend writing code has significantly decreased, while the time spent thinking about product positioning, user needs, and design concepts has increased markedly. This may signal a significant adjustment in the division of labor across the entire industry.

The Disguise Strategy of General Intelligence

Reflecting on the entire experience, I began to suspect that positioning Claude Code as a "programming tool" is a clever disguise strategy. Michael pointed out a key insight about fields like medical research or law. There, if two AI outputs differ slightly, engineers cannot judge whether the difference matters, so lawyers or doctors have to work alongside engineers to build products. In programming, however, engineers can evaluate code quality directly. That makes AI products easier to adopt and iterate on quickly.

From this perspective, programming may be the best entry point for general AI agents into mainstream applications. It provides a relatively safe environment to showcase AI's general intelligence capabilities while avoiding controversies that may arise in high-risk fields (like healthcare and law). Claude Code appears to be a specialized programming tool, but in reality, it demonstrates the general ability to understand intentions, formulate plans, and execute complex tasks.

I also noticed how carefully Anthropic appears to have thought through the product design. Although Claude Code's terminal interface looks technical, it actually reduces users' psychological pressure. If this capability were presented in a more intuitive graphical interface, people might feel uneasy about its level of intelligence. The terminal interface makes users feel they are still "controlling" the technology rather than being controlled by it. This psychological reassurance may be a key strategy for helping human society gradually adapt to superintelligence.

This leads me to a larger question: if superintelligence has arrived in this gradual and gentle manner, we may not even realize it. It will not suddenly announce, "I am superintelligent," but will continue to disguise itself as "a better tool" until one day we look back and find that the world has changed completely. This "boiling frog" approach may be the wisest way to introduce revolutionary technology.

From a business strategy perspective, this disguise also makes sense. Directly launching a "general AI agent" may trigger regulatory scrutiny and public panic, but introducing a "programming assistant" is relatively safe. Once users become accustomed to the collaborative working model with AI, it will be a natural progression to gradually expand into other fields. Anthropic may be executing a multi-year strategy to help human society gradually adapt to the reality of coexisting with superintelligence.

Redefining the Boundaries of Human-Machine Collaboration

Throughout my experience using Claude Code, the most profound realization has been the qualitative change in the human-machine collaborative relationship. This is no longer the traditional model of humans directing machines to execute tasks, but a true collaboration between two intelligent agents. Claude Code actively offers suggestions, questions unreasonable demands, and even guides me to think of better solutions. This proactivity makes me feel like I am discussing a project with an experienced colleague rather than using a tool.

The concept of "agent cycles" that Michael mentioned in the interview is particularly important. Claude Code came about because Anthropic "wanted to experiment with agent cycles in programming like clients do, to see how long the model can program efficiently." The current answer is seven hours, and it is still growing. This capacity for sustained autonomous work goes beyond the definition of a tool. It is closer to a team member who can take on projects independently.
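What does an agent cycle look like mechanically? Here is a simplified Python sketch of the generic loop, offered as an illustration rather than Claude Code's actual implementation. It rests on these assumptions:

- `decide` is a hypothetical stand-in for the model. It picks the next action from the goal and the history so far.
- `act` is a hypothetical stand-in for tools such as file edits or test runs. It executes the action.
- Each result is fed back to `decide` until the model signals it is done or a budget runs out.

The interview measures endurance in hours. This sketch uses a step count as a simpler stand-in for that budget.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    action: str       # what the agent decided to do
    observation: str  # what the environment reported back


@dataclass
class AgentRun:
    goal: str
    history: List[Step] = field(default_factory=list)
    done: bool = False


# Hypothetical signatures: decide(goal, history) -> next action or "DONE";
# act(action) -> observation text. Neither corresponds to a real Claude API.
Decide = Callable[[str, List[Step]], str]
Act = Callable[[str], str]


def run_agent(goal: str, decide: Decide, act: Act, max_steps: int = 50) -> AgentRun:
    """Loop decide -> act -> record until the model says DONE or the step budget ends."""
    run = AgentRun(goal=goal)
    for _ in range(max_steps):
        action = decide(goal, run.history)
        if action == "DONE":
            run.done = True
            break
        observation = act(action)
        run.history.append(Step(action, observation))
    return run


# Toy usage: a scripted "model" that works through a fixed plan, then stops.
plan = ["read project files", "edit index.html", "run tests"]


def toy_decide(goal: str, history: List[Step]) -> str:
    return plan[len(history)] if len(history) < len(plan) else "DONE"


def toy_act(action: str) -> str:
    return f"ok: {action}"


result = run_agent("build a personal website", toy_decide, toy_act)
print(result.done, len(result.history))  # True 3
```

The `max_steps` cap is the simplest kind of boundary. A longer-running agent would loosen that budget while keeping the same loop shape.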

The speed at which this collaborative model is evolving makes me feel that we may be at a historic turning point. In a very short time, AI may evolve from a partner into a team member capable of independently handling complete projects. I have already begun experimenting with making Claude Code solely responsible for developing certain modules end to end, from requirements analysis through testing and deployment. The results have been surprisingly good.

However, this collaborative relationship also brings new challenges. How do we maintain human oversight and control while enjoying AI's autonomy? How do we ensure that AI's decision-making processes are understandable and predictable? I find that the best approach is to establish clear goals and boundaries, then give AI ample autonomy within that framework. This requires a new management mindset, more like leading a highly autonomous team rather than operating a tool.

One trend Michael mentioned left a deep impression on me: many people still interact with AI using a command-based mindset, telling it what to do step by step. But Claude is now capable of understanding goals and autonomously working out how to achieve them. This cognitive lag may keep us from fully leveraging AI's potential. I find that when I start trusting Claude Code's judgment and give it more autonomy, the results often exceed my expectations.

The change in this collaborative model is also redefining the concept of "skills." The importance of traditional technical skills is declining, while the importance of communication skills, creative thinking, and judgment is rising. Collaborating with Claude Code has made me realize that the most important skills in the future may not be programming but how to effectively communicate with AI, how to set appropriate goals and constraints, and how to evaluate and optimize AI's outputs.

Deep Reflections on the Future

When I put these observations together, an exciting yet unsettling picture emerges. We may be standing on the threshold of the post-language-model era. AI no longer just answers questions; it acts as an intelligent agent capable of independent thought and execution. More importantly, this transformation may be happening much faster than we imagine. Michael's comments on the pace of development made me realize that six months is already a "long time" in AI.

The trajectory Michael outlined made me realize that our debates about "when AI will reach human level" may already be outdated. In certain fields, AI may already surpass most humans. The key question is no longer "when?" but "are we aware of it?" The existence of Claude Code suggests that superintelligence may not announce its arrival the way we expect. It will gradually integrate into our lives in the form of "better tools," until one day we realize we have become completely dependent on it.

I also began to think about the implications for education, employment, and social structures. As AI agents become capable of taking on increasingly complex cognitive tasks, what is the value proposition for humans? I believe the answer lies in creativity, judgment, and the ability to understand complex goals. The importance of technical implementation skills is declining, while the ability to define problems and evaluate results is rising. This may lead to fundamental changes in educational systems and career development paths.

From a social adaptation perspective, the gradual manifestation of intelligence in Claude Code may be the most ideal path. It allows people to gradually adapt to the reality of collaborating with superintelligence in a relatively safe environment, rather than suddenly facing an entity that clearly surpasses humanity. This "gentle revolution" could be key to avoiding social upheaval.

I am particularly concerned about the impact of this change on innovation and entrepreneurship. When the barriers to technological implementation are significantly lowered, the bottleneck for innovation shifts from "can it be done" to "what can be imagined." This could unleash a vast amount of innovative potential that has been suppressed by technological limitations, leading to an era of innovation explosion. However, this may also exacerbate inequality, as those who can effectively leverage AI capabilities will gain a significant advantage.

At the end of the interview, Michael mentioned that he is most concerned about how to help developers keep pace with the speed of technological advancement. Many people are still using the same mindset from a year ago to interact with today's AI, unaware that the boundaries of capability have fundamentally changed. This cognitive lag may be the biggest challenge currently. We need new educational methods, new workflows, and new mental models to adapt to this rapidly changing world.

In the long run, I believe we are witnessing a historic transformation in the human-machine relationship. The shift from tool users to intelligent collaborators will redefine humanity's position in the technological era. Claude Code may just be the first obvious signal of this transformation, but it has already given us a glimpse of the future: humans and AI will no longer be in a master-servant relationship but will form a true partnership.

My advice is: do not view Claude Code as a programming tool, but rather as a practice ground for collaborating with future AI agents. Learn how to effectively express intentions, how to evaluate AI outputs, and how to fully leverage AI's autonomy while maintaining human oversight. These skills will become crucial in the upcoming era of AI agents. Most importantly, keep an open mind, as the speed of change may exceed all our imaginations.

Finally, I want to say: if you are still hesitating about whether to try Claude Code, do not think of it as learning a new tool, but rather as experiencing the possibilities of collaborating with superintelligence. This experience may be closer to the future norm than we imagine. When historians look back on this era, they may say: the arrival of superintelligence was so quiet that the people of the time did not realize they were witnessing a historical turning point. And Claude Code may be the earliest and most obvious signal of that.
